In the first part of this series on how to effectively monitor Redis cluster stacks, we saw the first of the four types of scripts that can be useful to us. That part described a monitoring shell script that runs on every system and monitors the health of Redis on that particular system.

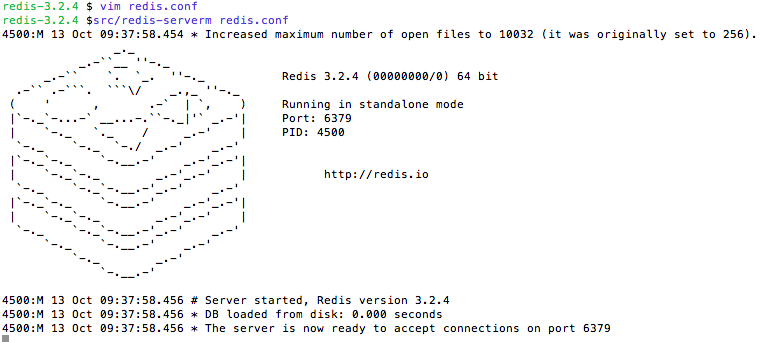

So, let's say we have the Redis instance monitoring script (described in part 1) running on a particular Redis server, and crontab runs it every 2 minutes to check that the Redis server process is up on that machine.

But what happens if the machine itself is down? In that case, the cron job will not run, and we will not get any notification about the Redis nodes on that machine. :(

In this part, we will see how a global script, run from outside the Redis machines, can fix the above problem.

In this script, we use the "cluster info" command to find out whether the cluster is in the OK state or not.

    #!/bin/bash
    # bash is needed here because the script uses [[ ... ]] pattern matching.

    erroringStacks=''

    # Expects five arguments: the 1st is the cluster identifier,
    # the 2nd and 3rd are the IP and port of a master in the cluster,
    # the 4th and 5th are the IP and port of the slave of that master.
    checkCluster() {
        msg="Redis Cluster $1 $2 $3 $4 $5 "
        # Try to get the cluster state using the first IP/port combination.
        val=$(redis-cli -c -h "$2" -p "$3" cluster info 2> /dev/null | grep 'cluster_state')
        if [[ $val == *"ok"* ]]
        then
            msg="$msg OK"
        else
            # If the first IP/port did not give an ok response,
            # try to get the cluster state from the second IP/port combination.
            val=$(redis-cli -c -h "$4" -p "$5" cluster info 2> /dev/null | grep 'cluster_state')
            if [[ $val == *"ok"* ]]
            then
                msg="$msg OK"
            else
                msg="$msg down"
                erroringStacks="$erroringStacks $1"
            fi
        fi
        # Print the per-stack status line.
        echo "$msg"
    }

    # Expects three arguments: the 1st is the stack identifier,
    # the 2nd and 3rd are the IP and port of the standalone instance.
    checkStandalone() {
        msg="Redis Standalone $1 $2 $3 "
        # Try to set a simple value in the standalone instance, and get the output.
        val=$(redis-cli -h "$2" -p "$3" set healthcheck:abc def 2> /dev/null)
        if [[ $val == *"OK"* ]]
        then
            msg="$msg OK"
        else
            msg="$msg down, not able to insert data"
            erroringStacks="$erroringStacks $1"
        fi
        # Print the per-stack status line.
        echo "$msg"
    }

    checkCluster C1 127.0.0.1 7000 127.0.0.1 7001
    checkStandalone S1 127.0.0.1 6380
    checkCluster C2 127.0.0.1 7010 127.0.0.1 7011

    if [[ "$erroringStacks" == "" ]]
    then
        echo "all well"
    else
        echo "The following stacks are erroring out: $erroringStacks "
        # Send an email to the concerned team.
    fi

The above script checks whether the various stacks are up or not.

For clusters, it gets the state from the redis-cli cluster info command. If the specified master node is not up, it checks that node's slave to see whether it is up and whether its cluster info reports ok. If neither the specified master nor its slave reports OK, the cluster is bound to be down, and the stack is recorded as erroring. Note that this method will not work for a cluster if cluster-require-full-coverage is set to 'no' in the redis.conf file, because the cluster then still reports itself as up even if some of its slots are not being served properly.
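For clusters that run with cluster-require-full-coverage set to 'no', one possible workaround is to look at the slot counters that cluster info also prints, rather than at cluster_state alone. The following is only a minimal sketch and not part of the original script: the function name clusterSlotsHealthy is hypothetical, and it assumes the cluster_slots_assigned, cluster_slots_pfail, and cluster_slots_fail fields appear in the cluster info reply.

```bash
# Hypothetical helper (not part of the original script).
# Reads "cluster info" output on stdin and succeeds only if all 16384
# slots are assigned and none of them are flagged pfail or fail.
# The body runs in a subshell, so its variables do not leak to the caller.
clusterSlotsHealthy() (
    # Strip carriage returns, since the reply lines may end with \r\n.
    info=$(tr -d '\r')
    # Print the value of one "name:value" field from the captured reply.
    field() { printf '%s\n' "$info" | awk -F: -v k="$1" '$1 == k {print $2}'; }
    [ "$(field cluster_slots_assigned)" = "16384" ] &&
        [ "$(field cluster_slots_pfail)" = "0" ] &&
        [ "$(field cluster_slots_fail)" = "0" ]
)
```

It could be used as `redis-cli -h 127.0.0.1 -p 7000 cluster info 2> /dev/null | clusterSlotsHealthy || echo "slots not fully served"`. Whether these counters catch every partial outage depends on the Redis version and topology, so treat it as a starting point.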

For a standalone instance, it checks the state by inserting a dummy key-value pair into Redis. If the pair cannot be inserted, the stack is recorded as erroring.
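A possible refinement of the standalone check is to let the dummy key expire on its own and to read it back after writing. This is a sketch under assumptions, not the original method: the function name standaloneWritable and the key name healthcheck:probe are made up for illustration, and it relies on the EX option of the SET command.

```bash
# Hypothetical variant of the standalone check (not part of the original script).
# Args: 1st is the IP, 2nd is the port.
# Writes a short-lived key and reads it back; succeeds only if both work.
# The body runs in a subshell, so "exit" only ends the function.
standaloneWritable() (
    key="healthcheck:probe"
    val="probe-$$"
    # EX 60 makes the probe key expire by itself after 60 seconds.
    reply=$(redis-cli -h "$1" -p "$2" set "$key" "$val" EX 60 2> /dev/null)
    [ "$reply" = "OK" ] || exit 1
    # Read the value back to confirm the write is actually visible.
    [ "$(redis-cli -h "$1" -p "$2" get "$key" 2> /dev/null)" = "$val" ]
)
```

For example, `standaloneWritable 127.0.0.1 6380 || echo "S1 down"` would report the S1 stack from the script above.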

The same checks can be run for multiple stacks by giving each one a stack name, which serves as the identifier for the stack. Here the cluster stacks are named C1 and C2, and the standalone stack is S1.
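When the number of stacks grows, the list could be kept in a separate file instead of being hardcoded as function calls. The sketch below assumes a made-up file format (one stack per line, starting with the word cluster or standalone) and a hypothetical function name runChecksFromFile. It also assumes that the checkCluster and checkStandalone functions from the script above are already defined in the same shell.

```bash
# Hypothetical loader (not part of the original script).
# Arg: path to a stack list file, one stack per line, for example:
#   cluster    C1 127.0.0.1 7000 127.0.0.1 7001
#   standalone S1 127.0.0.1 6380
# Blank lines and lines starting with "#" are skipped.
runChecksFromFile() {
    # Read the file on descriptor 3 so that commands run inside the loop
    # cannot accidentally consume the list from stdin.
    while read -r kind id a b c d <&3; do
        case "$kind" in
            cluster)    checkCluster "$id" "$a" "$b" "$c" "$d" ;;
            standalone) checkStandalone "$id" "$a" "$b" ;;
            ''|'#'*)    ;;
            *)          echo "unknown stack type: $kind" >&2 ;;
        esac
    done 3< "$1"
}
```

Because the loop reads from a redirect rather than a pipe, it runs in the current shell, so anything the check functions append to erroringStacks is still visible afterwards.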

If any stack is erroring, an appropriate alert can be generated.
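One way to generate the alert is to mail the list of erroring stacks. This is only a sketch: the function name sendAlert and the recipient address in the usage line are placeholders, and it assumes a working mail command (for example from a mailx package) is installed and configured on the monitoring machine.

```bash
# Hypothetical alert helper (not part of the original script).
# Args: 1st is the recipient address, 2nd is the list of erroring stack ids.
# Assumes a "mail" command that accepts -s SUBJECT and reads the body on stdin.
sendAlert() {
    # Nothing is erroring, so there is nothing to send.
    [ -n "$2" ] || return 0
    printf 'Checked from %s at %s\nErroring stacks: %s\n' \
        "$(uname -n)" "$(date)" "$2" |
        mail -s "Redis stacks erroring: $2" "$1"
}
```

In the script above, it could be called as `sendAlert ops@example.com "$erroringStacks"` in place of the "send an email" comment.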

This script can be scheduled in crontab to run at a fixed interval, such as every 5 minutes. Since the script uses the [[ ... ]] syntax, it should be run with bash rather than a plain sh.

    */5 * * * * bash /redis/monitorGlobalRedisStacks.sh

Also, ideally this script should run on at least 2-3 machines, so that at least one of them is guaranteed to be up at any time.

This concludes the second part of this series. In the third part, we will see how to set up an hourly script that checks various important parameters of the Redis servers against thresholds, and sends a mail when a memory, connection, or replication threshold is reached.

:)